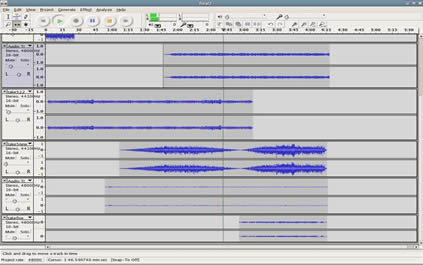

A Musical Composition Tool

So far we have dropped music tracks into Pro Tools like any other kind of audio file. We can create fade-ins and fade-outs to allow seamless transitions from one sound to the next, and from one musical passage to the next, manipulating the audience's focus and emotion.

We have also learned that we can drop in small musical passages to actually "compose" a larger piece of music by sequencing smaller musical passages, overlapping and transitioning music, and even arranging different instrument combinations in a "multi-track" scenario. Once a project tempo is set, Pro Tools manages that tempo with a grid and allows segments of sound, single events and even single notes, to be dropped into the musical construction.

This is very handy for creating a multitude of mixes and variations: thematically related segments that can later be used in interactive environments. Also, smaller segments of music can be repeated, or "looped," to create a larger musical experience from fewer data assets. These smaller components can then be used more effectively in interactive media titles.

When working with Pro Tools, the musical structure is actually easier to manage. Loops are imported at the project tempo, and each loop's length is assumed to be a whole number of beats at that tempo, usually 4 beats. The pitch can be adjusted by right-clicking on the loop region or by using the + or - keys on the number pad.
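The relationship between project tempo and loop length is simple arithmetic, and it can help to see it written out. The following is only a sketch of that arithmetic, not how Pro Tools works internally; the function names, the 44,100 Hz sample rate, and the idea that one pitch step equals one semitone are all assumptions made for illustration.

```python
def loop_seconds(tempo_bpm: float, beats: int = 4) -> float:
    """Length in seconds of a loop lasting `beats` beats at `tempo_bpm`."""
    return beats * 60.0 / tempo_bpm


def loop_samples(tempo_bpm: float, beats: int = 4, sample_rate: int = 44100) -> int:
    """Same length expressed in samples (sample rate is an assumed default)."""
    return round(loop_seconds(tempo_bpm, beats) * sample_rate)


def semitone_ratio(semitones: float) -> float:
    """Frequency ratio for a pitch shift, assuming equal-tempered semitones."""
    return 2.0 ** (semitones / 12.0)


if __name__ == "__main__":
    print(loop_seconds(120))   # a 4-beat loop at 120 BPM lasts 2.0 seconds
    print(loop_samples(120))   # 88200 samples at 44.1 kHz
    print(semitone_ratio(12))  # one octave up doubles the frequency: 2.0
```

A loop that is exactly 4 beats long at the project tempo will land on the grid every time it is repeated, which is why matching loop length to tempo matters.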

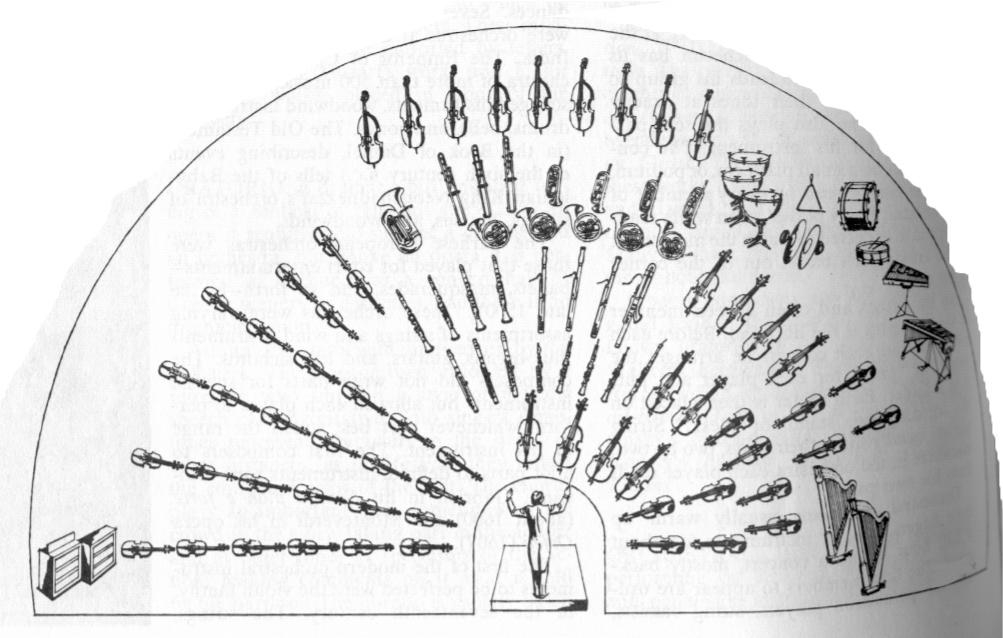

Mixing Tip

The following are a couple of visual guides for using the "pan" feature in the Audacity mixer when setting up a multi-instrumental mix. Note that the orchestra map is from the perspective of the conductor and the audience, while the drum kit is from the perspective of the drummer (reverse for the audience).

panning guide for instrument placement in an orchestral mix

panning guide for drums and cymbal placement for a multi-mic drum mix

Remember, the panning feature for each channel can be automated in the editing window and shown as an envelope, just like volume, a bus send, or a plug-in parameter. By "drawing" the pan values across the track editor, you can create very complex spatialization movement for each track. Normally this is used to place sound effects to the side of the screen, or off screen, when accompanied by visuals. But in a musical mix, passages of lead guitar or synthesizer can be greatly affected by dynamic panning; for example, "hocketing" left and right to a beat.
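To make the idea of a pan envelope concrete, here is a small sketch that builds the breakpoints for a left/right "hocket" on every beat and converts a pan position into left and right gains. The constant-power pan law used here is a common convention, assumed for illustration; the mixer you are using may apply a different law, and the function names are hypothetical.

```python
import math


def pan_gains(pan: float) -> tuple[float, float]:
    """Constant-power (left, right) gains for pan in [-1.0 (left), 1.0 (right)]."""
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1.0 and 1.0")
    angle = (pan + 1.0) * math.pi / 4.0  # maps pan to 0 .. pi/2
    return math.cos(angle), math.sin(angle)


def hocket_envelope(tempo_bpm: float, beats: int, width: float = 1.0):
    """Breakpoints (time_in_seconds, pan) that flip sides on every beat."""
    beat_len = 60.0 / tempo_bpm
    return [(i * beat_len, -width if i % 2 == 0 else width) for i in range(beats)]


if __name__ == "__main__":
    for time_s, pan in hocket_envelope(tempo_bpm=120, beats=4):
        left, right = pan_gains(pan)
        print(f"{time_s:4.2f}s  pan={pan:+.1f}  L={left:.3f}  R={right:.3f}")
```

Each breakpoint corresponds to one point you would draw on the pan envelope; a smaller `width` keeps the sound closer to center while still bouncing from side to side.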

MIDI

(Musical Instrument Digital Interface): MIDI file format

Acid differs from Vegas on a couple of different levels. They both deal with digital audio, but Audacity is based on compositing streams and events of digital audio, while Acid builds an audio experience from repeating loops of sound, generally rhythm. Acid also supports the world of MIDI, which allows direct control of external synthesizer instruments, both hardware and software. This difference is like comparing musical notation or word processing to an actual recording of a musical performance or a picture of a document. In both programs you can deal with a stream or a segment of large, and sometimes cumbersome, digital audio.

On the other hand, there is MIDI and the hybrid use of locally installed audio assets. Streaming the low-cost protocol of MIDI data over a web connection has proven to be a workable solution for playing music in games or on the net. With installed high-end sample cards or newer wave-table based software synthesizers running in memory, the sound of the Net can be not only pleasant and entertaining, but interactive and innovative. A hybrid of these technologies and the existing streaming audio technologies is the current game plan for making the Internet the new arena for interactive entertainment. Though quality seems to take yet another step backward, some say the interaction of the greater many outweighs the quality experience of the few.

One of the most common questions in producing games and multimedia for digital technology is "What about MIDI?" Rarely has there been a more used, abused, misused and misunderstood term. To those of us who have been living and breathing MIDI from its beginnings in 1983, it seems almost comical that a specification that was born from musicians' common needs could turn into such a buzzword and power tool for the new media. To quote the MIDI Manufacturers Association, "MIDI - the Musical Instrument Digital Interface - is computer code which enables people to use multimedia computers and electronic musical instruments to create, enjoy and learn about music." One mission of the organization, among other things, is to promote the fact that "MIDI is also the primary source of music in popular PC games and CD-ROM entertainment titles."

Understanding the fundamentals of music synthesis is the first step in understanding the need for and applications of MIDI. The simple tone module, made up of a sound source, sound modifiers, and a control input, is the basis for all digital sound sources. Manipulation of the ADSR (attack, decay, sustain, release) generators is primary in what gives each synthesized sound its individual characteristic. The control of pitch and expression in a multi-timbral setting is where MIDI control comes into play.
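As a rough illustration of what an ADSR generator does, the sketch below computes the envelope level at any moment of a note's life. It assumes straight-line segments and a peak level of 1.0, which is a simplification (many synthesizers use curved segments), and the function and parameter names are invented for this example.

```python
def adsr_level(t, attack, decay, sustain, release, note_off):
    """Envelope level (0.0 to 1.0) at time t, for a key released at note_off.

    attack, decay and release are durations in seconds; sustain is a level.
    """
    def held(time):
        # Level while the key is still held down.
        if time < attack:
            return time / attack                      # rise from 0 to peak
        if time < attack + decay:
            return 1.0 - (1.0 - sustain) * (time - attack) / decay  # fall to sustain
        return sustain                                # hold until key release

    if t < 0:
        return 0.0
    if t < note_off:
        return held(t)
    elapsed = t - note_off
    if elapsed >= release:
        return 0.0
    # Fade out from whatever level had been reached when the key was released.
    return held(note_off) * (1.0 - elapsed / release)


if __name__ == "__main__":
    # A plucked-style shape: fast attack, short decay, medium sustain level.
    for t in (0.0, 0.05, 0.2, 0.5, 1.15, 2.0):
        print(t, round(adsr_level(t, 0.1, 0.2, 0.5, 0.3, note_off=1.0), 3))
```

Changing only these four values turns the same raw tone into something closer to a pad (slow attack, long release) or a percussive hit (instant attack, no sustain), which is why the envelope has so much influence on a sound's character.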

On the creative side of software production, the two most specialized needs that have called for outside talent are the practical knowledge of the MIDI specification and digital audio techniques. If the difference between these two forms of digital sound can be expressed, it would be the difference between the punched paper roll of an old-time player piano and an actual tape recording. Another way to look at it is, a MIDI file is the numbered sketch for a "paint-by-numbers" painting and a digital audio file is a photograph of that painting.
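The player-piano comparison can be put into rough numbers. The sketch below compares the storage needed for uncompressed audio against the bytes needed to describe notes as MIDI events. The figures are deliberately simplified assumptions: CD-style audio (44,100 Hz, 16-bit, stereo), and each note counted as one 3-byte note-on plus one 3-byte note-off, ignoring the timing data and other overhead a real MIDI file would add.

```python
def pcm_bytes(seconds, sample_rate=44100, channels=2, bytes_per_sample=2):
    """Approximate size of uncompressed audio (the 'tape recording')."""
    return int(seconds * sample_rate * channels * bytes_per_sample)


def midi_note_bytes(note_count, bytes_per_message=3):
    """Approximate size of the note events alone (the 'piano roll')."""
    return note_count * 2 * bytes_per_message  # one note-on and one note-off each


if __name__ == "__main__":
    audio = pcm_bytes(60)          # one minute of stereo audio
    notes = midi_note_bytes(500)   # a busy minute of music: 500 notes
    print(audio, notes, audio // notes)
```

Even with generous allowances for the overhead left out here, the instructions for a performance are thousands of times smaller than a recording of it, but they only sound as good as the instrument that plays them back.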

But just what are the practical applications for this thing called MIDI? It is the way two musical instruments from different manufacturers can be made to talk to one another, control each other, and function as parts of a larger system. It is a way to create a local area network of musical and audio modules under the control of a central server and/or controlling workstation. It is a way to record all activity on this network in a time-based language that can be edited in non-real-time, with a number of different graphic interfaces. It is also a way to create a musical score so accurate, and with such detail and expression, that it is virtually identical to a live performance or professional recording. All this, and it is still a computer protocol that is usable or convertible to producing sound and music on virtually every form of digital home entertainment, from game consoles to personal computers.

MIDI is both a computer language and a musical language that has successfully migrated from the isolated labs of experimental electronic music, to the highly lucrative fields of musical instrument manufacturing and merchandising, to the professional stages and production studios of the broadcasting and recording industries, and finally to the home studios and living rooms of the media consuming public. How a relatively simple system of control can be so widespread and transparent, and yet so misunderstood is perhaps the mystery of rocket science itself.

A Need For A Musical Instrument Standard

Long before there were personal computers, sequencers and stacks of interface-less modules, there was the synthesizer. As mentioned in the previous part of this book, the early synthesizers were strictly collections of analog circuits cleverly designed to squeeze out as many flavors of noise as possible. As digital memory was developed these analog systems were enhanced with the ability to capture and retain series of patch information allowing the musician to instantaneously switch from one meticulously programmed sound to another. These were still analog synthesizers but had become hybrid systems under digital control.

Stepping back to the truly modular synthesizer systems, when every function was a separate module (oscillators, filters, controllers, etc.), there was an infinite versatility in being able to patch these components together in any order or combination. The functionality of one control source could yield desirable results controlling the unusual or seldom used parameter of another module. As synthesizer systems became streamlined in their manufacturing, and standardized in their configuration of components, there was an attempt to maintain some openness in their design by allowing the keyboard, sequencer and other control functions to have output jacks on the casing. Likewise, there were input jacks allowing similar control to come from external gear to control parameters within the system.

This was indeed a gracious gesture on the part of the instrument manufacturers, with one big catch. The analog, voltage-based specifications for connecting these systems were specific to each manufacturer. In other words, though all systems were using similar technology, the keyboard output from one make of synthesizer would not be compatible with the control input on a synthesizer from another company. For professional musicians who relied on a variety of instruments to create their sound, this was a major inconvenience.

Though some third-party systems were available to translate control voltages from one to another, these could rarely accommodate more than a couple of makes, and there were few interfaces or synchronization boxes that could match all of them. With the advent of stand-alone dedicated hardware sequencers, this became more than an inconvenience; it was a major frustration. As the concept of the software sequencer loomed on the horizon, it became evident to the heads of the musical instrument industry that something had to be done.

The addition of the personal computer to the multi-instrument control formula pointed to the need for this new standard to be a digitally based protocol that could be configured for all applicable electronic devices: musical instruments, signal effect boxes, studio mixers and recorders, and even live-performance technology such as lighting. With the existence of this protocol as a computer language, it would be usable in all digital processing environments and versatile enough to be generated and edited in an infinitely creative environment: that of computer software.

Equating digital control to the established technology of musical synthesizers was a fairly easy connection in light of the several hybrid systems already using digital technology for parameter management and patch memory storage. Although the standards of volts-per-octave and plus/minus values for controlling pitch/frequency differed from manufacturer to manufacturer, when translating incremental pitch control to the digital world it was obvious that there were very few ways to accomplish this. The establishment of the extended 128-step keyboard as a structure for addressing pitch in this digital specification became the backbone of the MIDI standard. Beyond that, the definition of an open number of different kinds of controls and the agreement to support up to 16 channels per network connection contributed to a workable standard that could be widely implemented in the electronic music world.
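The 128-step keyboard and the 16 channels show up directly in the bytes of a MIDI message. The sketch below builds a standard three-byte note-on message and converts a note number to a frequency. The helper names are hypothetical, and the frequency conversion assumes equal temperament with note 69 tuned to A = 440 Hz, which is the usual convention but is a matter of how the receiving instrument is tuned rather than part of the message itself.

```python
def note_to_hz(note: int, a4_hz: float = 440.0) -> float:
    """Frequency of a MIDI note number (0-127), assuming equal temperament."""
    if not 0 <= note <= 127:
        raise ValueError("MIDI note numbers run from 0 to 127")
    return a4_hz * 2.0 ** ((note - 69) / 12.0)


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Three-byte note-on message; channel is numbered 1-16 as musicians count."""
    if not 1 <= channel <= 16:
        raise ValueError("MIDI channels run from 1 to 16")
    if not 0 <= note <= 127 or not 0 <= velocity <= 127:
        raise ValueError("note and velocity must fit in 7 bits (0-127)")
    status = 0x90 | (channel - 1)  # 0x9n: note-on, low four bits select the channel
    return bytes([status, note, velocity])


if __name__ == "__main__":
    print(note_on(1, 60, 100).hex())  # middle C on channel 1: 903c64
    print(note_to_hz(69))             # 440.0
    print(round(note_to_hz(60), 2))   # middle C, about 261.63 Hz
```

Because the channel takes up only four bits of the first byte and each data value only seven bits, the limits of 16 channels and 128 steps fall straight out of the message layout.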

At this point, it is very rare to find any computer platform that does not have provisions for supporting the MIDI specification, either with an add-on MIDI interface or by actually including it in the hardware. Also, virtually every electronic musical instrument released since the specification, with the exception of very low-end consumer products, has included a MIDI interface and some level of functionality allowing it to communicate with the outside world. Once the leading musical instrument manufacturers agreed on these specifications, a cross-platform network protocol was established for music years before anything like it was a reality for the general desktop computer industry.